Video surveillance readings

From UCLA_dataset

Notice: please feel free to edit any information on this page. The spirit of a wiki page is that everyone can share their opinions and information. Here is how to edit a wiki page: first register as a user with the login/create user button, then click the "edit" button next to the section you would like to change.

To make paper discussion more convenient, we can link every title to a new page. For example, "Camera Calibration from Video of a Walking Human" (see the Calibration section) appears in red because its page has not been written yet. Clicking on it lets you edit the new page.


Video Surveillance

We may use the following categorization of topics in video surveillance, similar to this image. Some topics are of particular interest, such as camera calibration, synchronizing multiple cameras, tracking objects in video and event/action recognition. We will also review the competitions and methods in the celebrated Trecvid benchmark.

| perspective | categorization |
|---|---|
| camera type | single image |
| | video from fixed camera |
| | video from moving camera |
| | video from multiple cameras |
| level of processing | low-level processing: motion segmentation, region segmentation, edge features, etc. |
| | detection |
| | tracking |
| | activity recognition |

Calibration

| Author/Date/Source | Title | Content | Project page |
|---|---|---|---|
| F. Lv et al. PAMI 2006 pdf | Camera Calibration from Video of a Walking Human | A self-calibration method that estimates a camera's intrinsic and extrinsic parameters from vertical line segments of the same height. The needed line segments are obtained by detecting the head and feet positions of a walking human in his leg-crossing phases. Experiments show robust results under various viewing angles and with different subjects. | N.A. |
| R. Xu et al. ICPR 2008 pdf | A Computer Vision Based Camera Pedestal's Vertical Motion Control | Proposes an automatic procedure that mimics a human camera operator. Experiments control the vertical motion of a pedestal by leveling its position with a human head or a tracked hand-held object. | N.A. |
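A common intermediate step when calibrating from vertical segments of equal height, as in the Lv et al. entry, is locating the vertical vanishing point, where the images of all head-to-foot lines meet. The sketch below illustrates only that step and is not the paper's algorithm: each (head, foot) pixel pair defines an image line via the homogeneous cross product, and the vanishing point is taken as the least-squares intersection of those lines. The function names and the synthetic segments are hypothetical.

```python
import math


def line_through(p, q):
    """Line a*x + b*y + c = 0 through image points p and q, scaled so a^2 + b^2 = 1."""
    (x1, y1), (x2, y2) = p, q
    # Homogeneous cross product (x1, y1, 1) x (x2, y2, 1)
    a = y1 - y2
    b = x2 - x1
    c = x1 * y2 - x2 * y1
    n = math.hypot(a, b)
    if n == 0:
        raise ValueError("degenerate segment: head and foot coincide")
    return a / n, b / n, c / n


def vertical_vanishing_point(segments):
    """Least-squares intersection of head-to-foot lines.

    segments: list of (head_xy, foot_xy) pixel pairs.
    Returns the point minimizing the sum of squared distances to all lines.
    """
    saa = sab = sbb = sac = sbc = 0.0
    for head, foot in segments:
        a, b, c = line_through(head, foot)
        saa += a * a
        sab += a * b
        sbb += b * b
        sac += a * c
        sbc += b * c
    det = saa * sbb - sab * sab
    if abs(det) < 1e-12:
        # All lines parallel in the image: the vanishing point is at infinity
        raise ValueError("head-to-foot lines are (nearly) parallel in the image")
    # Normal equations [saa sab; sab sbb] [x y]^T = -[sac sbc]^T, solved by Cramer's rule
    x = (-sac * sbb + sab * sbc) / det
    y = (-saa * sbc + sab * sac) / det
    return x, y
```

For example, the segments `((0, 0), (50, 500))`, `((200, 0), (150, 500))` and `((100, 0), (100, 500))` all lie on lines through (100, 1000), which the function returns up to rounding. Minimizing point-to-line distances in pixel coordinates is a simple choice; it degrades when the true vanishing point is very far away, which is why the code refuses the parallel case instead of returning a point at infinity.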

Single-camera tracking

Benchmarks

| Author/Date/Source | Title | Content | Project page |
|---|---|---|---|
| R. Collins et al. PETS'05 pdf | An Open Source Tracking Testbed and Evaluation Web Site | Implements a GUI testbed (based on OpenCV) for tracking objects (e.g., cars) in video. The source code and data appear to be private. | html |

Multi-camera tracking

News! NIST recently posted a multi-camera tracking challenge (see this). The challenge will be held at the 6th IEEE Conference on Advanced Video and Signal Based Surveillance (AVSS).

| Author/Date/Source | Title | Content | Project page |
|---|---|---|---|
| K. Kim and L. Davis, ECCV'06 pdf | Multi-camera (multi-view), multi-hypothesis, multi-target segmentation and tracking | Efficient automatic tracking of multiple targets from multiple cameras (or views) is valuable for law enforcement, military and commercial applications. The paper proposes a multi-view, multi-hypothesis approach to segmenting and tracking multiple persons on a ground plane. The tracking state space is the set of ground points of the people being tracked. During tracking, several iterations of segmentation are performed using human appearance models and the ground-plane homography. | N.A. |
| J. Berclaz et al. ECCV'08 pdf | Multi-Camera Tracking and Atypical Motion Detection with Behavioral Maps | A framework for incident detection based on a multi-camera setup. The goal is two-fold: (1) process the output of several cameras to handle occlusions among people and between people and their environment, yielding more robust people detection and tracking; (2) capture the motion of a person or a group of people to make the interpretation of abnormal behaviors much easier. | video demos |
| F. Fleuret et al. PAMI'08 pdf | Multi-Camera People Tracking with a Probabilistic Occupancy Map | | |
| C. Loy et al. CVPR'09 pdf | Multi-Camera Activity Correlation Analysis | A Cross Canonical Correlation Analysis (xCCA) framework is formulated to detect and quantify temporal and causal relationships between regional activities within and across camera views. The approach accomplishes three tasks: (1) estimating the spatial and temporal topology of the camera network; (2) enabling more robust and accurate person re-identification; (3) performing global activity modelling and video temporal segmentation by linking visual evidence collected across camera views. | html |
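The Kim and Davis entry tracks each person as a point on the ground plane and relies on the ground-plane homography between views. As a minimal sketch of that geometric step only (not their multi-hypothesis segmentation), the code below maps each camera's foot pixel into shared ground-plane coordinates with a per-camera 3×3 image-to-ground homography and measures whether two detections agree. The matrices `H_A` and `H_B`, the ground units and the 0.5 tolerance are hypothetical placeholders; in practice the homographies would come from calibration.

```python
import math

# Hypothetical image-to-ground homographies for two cameras (ground units: meters)
H_A = [[0.01, 0.0, 0.0], [0.0, 0.01, 0.0], [0.0, 0.0, 1.0]]
H_B = [[0.01, 0.0, -1.0], [0.0, 0.01, 2.0], [0.0, 0.0, 1.0]]


def apply_homography(H, point):
    """Map pixel (x, y) through a 3x3 homography H given as a list of rows."""
    x, y = point
    u = H[0][0] * x + H[0][1] * y + H[0][2]
    v = H[1][0] * x + H[1][1] * y + H[1][2]
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    if abs(w) < 1e-12:
        raise ValueError("point maps to infinity under H")
    # Divide by the homogeneous coordinate to get ground-plane coordinates
    return u / w, v / w


def ground_distance(H_a, foot_a, H_b, foot_b):
    """Distance on the ground plane between two foot detections from different cameras."""
    ga = apply_homography(H_a, foot_a)
    gb = apply_homography(H_b, foot_b)
    return math.hypot(ga[0] - gb[0], ga[1] - gb[1])


def same_ground_point(H_a, foot_a, H_b, foot_b, tol=0.5):
    """True if the two detections plausibly belong to the same person on the ground plane."""
    return ground_distance(H_a, foot_a, H_b, foot_b) <= tol
```

With these placeholder matrices, pixel (250, 300) in camera A and pixel (350, 100) in camera B both map to ground point (2.5, 3.0), so `same_ground_point(H_A, (250, 300), H_B, (350, 100))` is true. Working on the ground plane gives all cameras a common coordinate frame, which is what makes cross-view association a simple distance test.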

Object Identification through multiple cameras

Object identification (OID) is a specialized form of recognition in which the category is known (e.g., cars) and the algorithm recognizes an object's exact identity (e.g., Bob's BMW). Two challenges characterize OID. (1) Inter-class variation is often small (many cars look alike) and may be dwarfed by illumination or pose changes. (2) There may be many classes but only a few positive training examples per class, or just one. Because of (1), a solution must locate possibly subtle, object-specific salient features (a door handle) while avoiding distracting ones (a specular highlight). However, (2) rules out applying standard feature-selection techniques directly to each class.

| Author/Date/Source | Title (Discussion) | Content | Project page |
|---|---|---|---|
| A. Ferencz, E. G. Learned-Miller and Jitendra Malik, ICCV'05 pdf IJCV'08 pdf | Learning to Locate Informative Features for Visual Identification | Describes an on-line algorithm that takes one model image from a known category and builds an efficient same-vs.-different classification cascade by predicting the most discriminative feature set for that object. The method not only estimates the saliency and scoring function for each candidate feature, but also models the dependencies between features, building an ordered feature sequence unique to a specific model image that maximizes cumulative information content. Learned stopping thresholds make the classifier very efficient. To make this possible, category-specific characteristics are learned automatically in an off-line training procedure from labeled image pairs of the category, without prior knowledge about the category. Using the same algorithm for both cars and faces, the method outperforms a wide variety of other methods. | html |
| Ying Shan, Harpreet S. Sawhney and Rakesh Kumar, CVPR'05 pdf PAMI'08 pdf | Unsupervised Learning of Discriminative Edge Measures for Vehicle Matching between Nonoverlapping Cameras | Proposes an unsupervised algorithm that learns discriminative features for matching road vehicles between two nonoverlapping cameras. Matching is formulated as a same-different classification problem: compute the probability that vehicle images from two distinct cameras come from the same vehicle or from different vehicles. The measurement vector consists of three independent edge-based measures and their associated robust measures, computed from a pair of aligned vehicle edge maps. The weight of each measure is determined by an unsupervised learning algorithm that optimally separates the same and different classes in the combined measurement space. This is achieved with a weak classification algorithm that automatically collects representative samples from the same and different classes, followed by a more discriminative classifier based on Fisher’s Linear Discriminants and Gibbs Sampling. The robustness of the match measures and the use of unsupervised discriminant analysis ensure that the method performs consistently in the presence of missing/false features, temporally and spatially changing illumination, and systematic misalignment caused by different camera configurations. Extensive experiments on real data of more than 200 vehicles at different times of the day demonstrate promising results. | N.A. |
| Yanlin Guo, Steve Hsu, Harpreet S. Sawhney, Rakesh Kumar and Ying Shan, PETS'05 pdf PAMI'08 | Robust Object Matching for Persistent Tracking with Heterogeneous Features | Presents a unified framework that employs a heterogeneous collection of features such as lines, points and regions for robust vehicle matching under variations in illumination, aspect and camera pose. It fully exploits the characteristics of vehicles, which consist of relatively large textureless areas delimited by line-like features, and demonstrates the importance of using heterogeneous features at different stages of vehicle matching. | N.A. |
| Yanlin Guo, Cen Rao, S. Samarasekera, J. Kim, R. Kumar and H. Sawhney, CVPR'08 pdf | Matching vehicles under large pose transformations using approximate 3D models and piecewise MRF model | Presents a robust object recognition method based on approximate 3D models that can match objects under large viewpoint changes and partial occlusion. The specific problem is: given two views of an object, determine whether they show the same object or different objects. A key contribution is the use of approximate models with locally and globally constrained rendering to determine matching objects. A compact set of 3D models provides geometric constraints and transfers appearance features for matching across disparate viewpoints. The closest model from the set, together with its pose with respect to the data, is used to render an object at both the pixel (local) level and the region/part (global) level. In particular, symmetry and semantic part ownership are used to extrapolate appearance information. A piecewise Markov Random Field (MRF) model combines observations from the local pixel level and the global region level, and Belief Propagation (BP) with reduced memory requirements is used to solve the MRF model efficiently. No training is required, and a realistic object image in a disparate viewpoint can be obtained from as few as one image. | N.A. |
| E. Nowak and F. Jurie, CVPR'07 pdf | Learning Visual Similarity Measures for Comparing Never Seen Objects | Proposes and evaluates an algorithm that learns a similarity measure for comparing never seen objects. The measure is learned from pairs of training images labeled same or different. This is far less informative than the commonly used individual image labels (e.g., car model X), but it is cheaper to obtain. The algorithm learns the characteristic differences between local descriptors sampled from pairs of same and different images. These differences are vector quantized by an ensemble of extremely randomized binary trees, and the similarity measure is computed from the quantized differences. Extremely randomized trees are fast to learn, robust thanks to the redundant information they carry, and have been shown to be very good clusterers. Furthermore, the trees efficiently combine different feature types (SIFT and geometry). | N.A. |
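The Shan et al. entry weights its edge-based measures with a classifier based on Fisher's Linear Discriminants. The sketch below shows only the textbook two-class Fisher discriminant applied to same/different measurement vectors, assuming labeled samples are already available; the paper collects those samples automatically with a weak classifier and also uses Gibbs sampling, and both steps are omitted here. The small ridge term (to keep the within-class scatter invertible) and the midpoint threshold are our own choices, and all function names are hypothetical.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def mean(vectors):
    """Component-wise mean of a list of equal-length vectors."""
    n, d = len(vectors), len(vectors[0])
    return [sum(v[i] for v in vectors) / n for i in range(d)]


def scatter(vectors, m):
    """Scatter matrix sum_k (v_k - m)(v_k - m)^T as a list of rows."""
    d = len(m)
    S = [[0.0] * d for _ in range(d)]
    for v in vectors:
        diff = [v[i] - m[i] for i in range(d)]
        for i in range(d):
            for j in range(d):
                S[i][j] += diff[i] * diff[j]
    return S


def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [list(A[i]) + [b[i]] for i in range(n)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        if abs(M[piv][col]) < 1e-12:
            raise ValueError("singular within-class scatter matrix")
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x


def fisher_lda(same, different, ridge=1e-6):
    """Two-class Fisher discriminant w = (S_same + S_diff + ridge*I)^-1 (m_same - m_diff).

    Returns (w, threshold), with the threshold at the midpoint of the projected class means.
    """
    m1, m0 = mean(same), mean(different)
    S1, S0 = scatter(same, m1), scatter(different, m0)
    d = len(m1)
    Sw = [[S1[i][j] + S0[i][j] + (ridge if i == j else 0.0) for j in range(d)]
          for i in range(d)]
    w = solve(Sw, [m1[i] - m0[i] for i in range(d)])
    return w, 0.5 * (dot(w, m1) + dot(w, m0))


def is_same(w, threshold, measurement):
    """Label a measurement vector 'same vehicle' if its projection exceeds the threshold."""
    return dot(w, measurement) > threshold
```

Because the within-class scatter is positive definite, the projected "same" mean always lies above the threshold and the "different" mean below it; how well new pairs are separated depends on how representative the collected samples are.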

Recognize Activities from Video

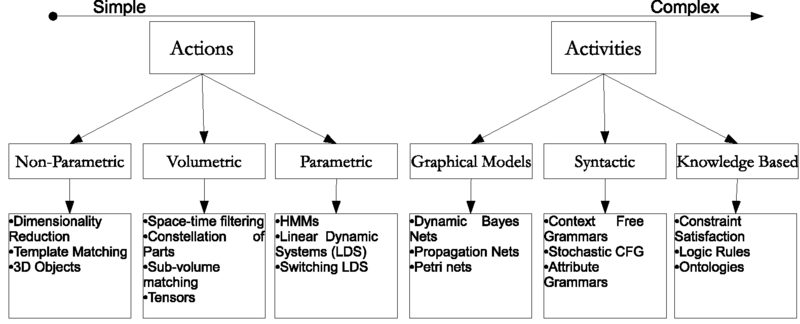

The past decade has witnessed a rapid proliferation of video cameras in all walks of life, resulting in a tremendous explosion of video content. Applications such as content-based video annotation and retrieval, highlight extraction and video summarization require recognizing the activities occurring in a video. The analysis of human activities in videos is increasingly important in areas ranging from security and surveillance to entertainment and personal archiving. The paper "Machine Recognition of Human Activities: A Survey" by P. Turaga, R. Chellappa et al. provides a comprehensive review of efforts over the past couple of decades to address the representation, recognition and learning of human activities from video, as well as related applications. As shown in the figure above, they divide the problem into two major levels of complexity: a) actions and b) activities. Actions are simple motion patterns typically executed by a single human. Activities are more complex and involve coordinated actions among a small number of humans. Following this division, we introduce related work for both levels.

| Author/Date/Source | Title | Content | Project page |
|---|---|---|---|
| Y. Ke et al. ICCV'07 pdf | Event Detection in Cluttered Videos | Explores the use of volumetric features for event detection. Proposes a method to correlate spatio-temporal shapes to video clips that have been automatically segmented. The method works on over-segmented videos and requires neither background subtraction nor reliable object segmentation. The project webpage includes a series of works from 2005 to 2007. | html |
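Matching a spatio-temporal shape template against an over-segmented video, as in the Ke et al. entry, can be scored region by region. The sketch below is a simplified region-based shape distance in that spirit, not a reproduction of the paper: each segment overlapping the template contributes the cheaper of two errors, either the template voxels it covers (if the segment is left out of the match) or its voxels outside the template (if the whole segment is included). Voxels are `(t, y, x)` tuples, and the function name and data layout are our assumptions.

```python
from collections import Counter


def region_shape_distance(template, labels):
    """Shape distance between a template and an over-segmented video volume.

    template: set of (t, y, x) voxels covered by the spatio-temporal template.
    labels:   dict mapping every (t, y, x) voxel of the volume to a segment id.
    Template voxels outside the labeled volume are ignored in this sketch.
    """
    size = Counter(labels.values())  # voxels per segment
    inside = Counter(labels[v] for v in template if v in labels)  # overlap per segment
    # Excluding a segment misses `k` template voxels; including it adds size - k extra ones
    return sum(min(k, size[s] - k) for s, k in inside.items())
```

A template that exactly covers a union of segments scores 0, and small misalignments that spill into a large segment cost only the few voxels involved, so no clean foreground mask is needed. Sliding the template over positions and times and keeping low-distance matches would turn this score into a detector.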

Space-time manifold

| Author/Date/Source | Title | Content | Project page |
|---|---|---|---|
| Y. Wexler and D. Simakov, ICCV 2005 pdf | Space-Time Scene Manifolds | A method to cut through the space-time volume without incurring visual artifacts or distortions. Every small part of the manifold can be seen in some input image, even though the manifold as a whole spans the entire video. The cut is posed as a shortest path in a graph, so the global optimum can be found efficiently. The method handles both static and dynamic scenes, with or without parallax. | results and code |
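The Wexler and Simakov entry poses the cut as a shortest path in a graph. As a simplified illustration of why such a cut can be optimized globally, the sketch below works on a 2D (x, t) slice of the volume: `cost[t][x]` is a hypothetical penalty for taking image column x from frame t, and the cut picks one frame per column, with neighboring columns at most one frame apart. Dynamic programming over this chain-structured graph finds the minimum-cost cut exactly. The cost definition, the one-frame continuity constraint and the function name are assumptions, not the paper's formulation.

```python
def min_cost_cut(cost):
    """Minimum-cost cut through an (x, t) slice.

    cost[t][x]: penalty for taking column x from frame t.
    Returns (total_cost, frames) where frames[x] is the chosen frame for column x,
    subject to |frames[x + 1] - frames[x]| <= 1 (a continuous cut).
    """
    T, X = len(cost), len(cost[0])
    INF = float("inf")
    acc = [[INF] * X for _ in range(T)]  # best cost of a cut ending at (t, x)
    back = [[0] * X for _ in range(T)]   # frame chosen for column x - 1
    for t in range(T):
        acc[t][0] = cost[t][0]
    for x in range(1, X):
        for t in range(T):
            # Allowed predecessors: frames t - 1, t, t + 1 in the previous column
            prev = min(range(max(0, t - 1), min(T, t + 2)), key=lambda p: acc[p][x - 1])
            acc[t][x] = acc[prev][x - 1] + cost[t][x]
            back[t][x] = prev
    t = min(range(T), key=lambda r: acc[r][X - 1])
    total = acc[t][X - 1]
    frames = [0] * X
    for x in range(X - 1, -1, -1):  # trace the optimal path backwards
        frames[x] = t
        t = back[t][x]
    return total, frames
```

For instance, with `cost = [[0, 9, 9], [9, 9, 9], [9, 9, 0]]` the cut cannot jump straight from frame 0 to frame 2, so the optimum is `(9, [0, 1, 2])`. The running time is linear in the number of pixels in the slice, which is what makes a globally optimal cut affordable.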

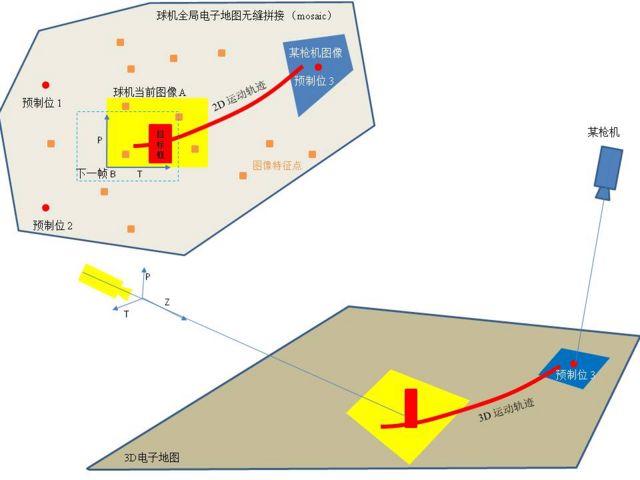

Scene Mosaic

Video Retrieval Benchmark

Trecvid is an important benchmark for video surveillance, video retrieval, high-level feature extraction, etc. Previously there was also a "shot boundary detection" task, which cuts long videos into short clips. Trecvid is an annual competition, and a workshop is organized to summarize the submitted methods and results. The publications for Trecvid 2008, in which 77 teams participated, can be found here.

Data. The database includes 400 hours of news video, documentary video, etc.
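The shot boundary detection task mentioned above asks where one shot ends and the next begins. A classic baseline, sketched here under simplifying assumptions and not representing any particular Trecvid submission, compares gray-level histograms of consecutive frames and declares a cut when their L1 distance exceeds a threshold. Frames are flat lists of 0-255 intensities; the 16 bins and the 0.5 threshold are hypothetical defaults.

```python
def histogram(frame, bins=16):
    """Normalized gray-level histogram of a frame given as a flat list of 0-255 values."""
    h = [0] * bins
    for p in frame:
        h[p * bins // 256] += 1
    n = len(frame)
    return [c / n for c in h]


def shot_boundaries(frames, bins=16, threshold=0.5):
    """Indices i such that a cut is declared between frame i - 1 and frame i."""
    if not frames:
        return []
    cuts = []
    prev = histogram(frames[0], bins)
    for i in range(1, len(frames)):
        cur = histogram(frames[i], bins)
        # L1 distance between normalized histograms lies in [0, 2]
        d = sum(abs(a - b) for a, b in zip(prev, cur))
        if d > threshold:
            cuts.append(i)
        prev = cur
    return cuts
```

Histograms ignore where pixels are, so camera and object motion within a shot barely change them, while a cut to a new scene usually does. This only catches abrupt cuts; gradual transitions such as fades and dissolves spread the change over many frames and need more than a single frame-to-frame comparison.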